Do you have an accent, or do other people?

We all do! There are an amazing number of different sounds that humans use for expressing the same words, and variations are driven by dialect, custom, emotion, and how a word is being used. Dealing with all these variations appropriately is one of the hard problems faced by all voice platforms.

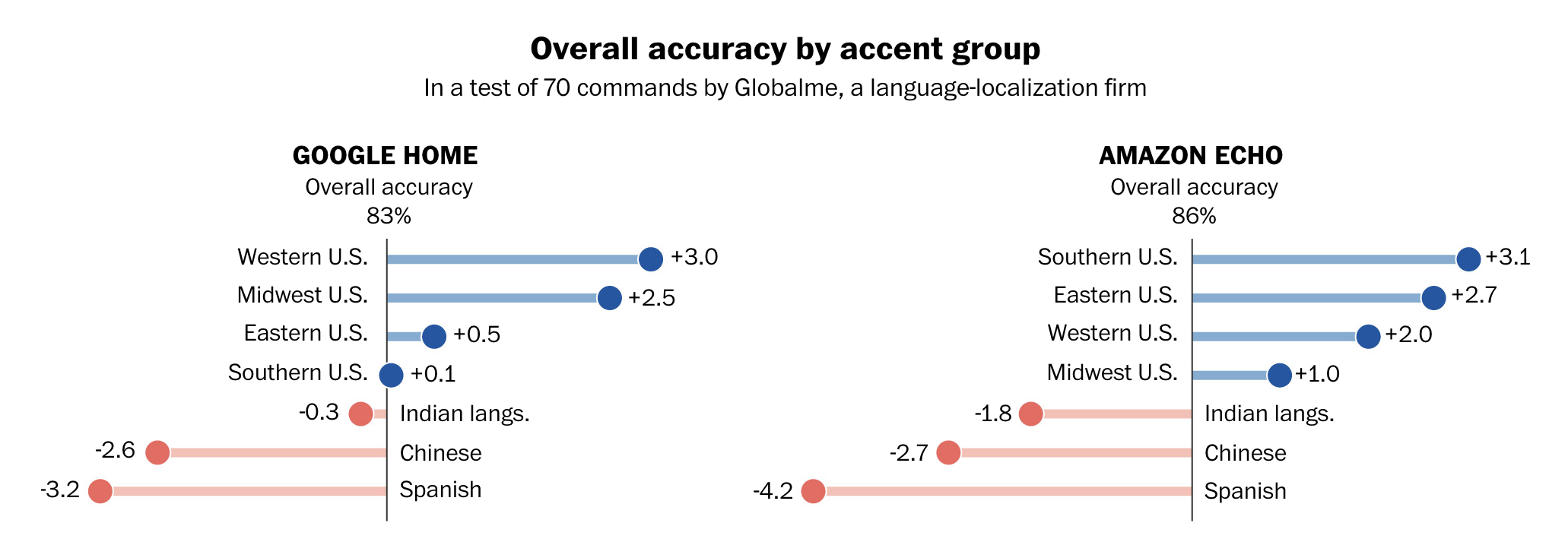

How accurately a computer can convert sounds into words has advanced enormously in the past few years, and these advances are part of the essential foundation underpinning the growth of the voice ecosystem. However, while strides are being made on all fronts, some accents may be at a disadvantage relative to others, and this may hurt voice device adoption within some demographics.

Last March, Drew Harwell, a reporter for The Washington Post, was interested in investigating this issue, and he reached out to us at Pulse Labs for help designing and implementing a test to study it. We designed a test comparing how well Alexa understood three Washington Post headlines read by three different linguistic groups.

These headlines were:

“As Winter Games close, team USA falls short of expectations”

“China proposes removal of two-term limit, potentially paving way for Xi to remain president.”

“Trump bulldozed Fox News host, showing again why he likes phone interviews.”

Drew presented the findings in his excellent Washington Post article. Our friend Julia Silge helped out with the technical analysis, and she shares the in-depth methodological details on her blog.

The basic finding is that Alexa understands speakers with accents considered neutral or from English speaking regions (like the American South, Brooklyn, or London), better than people with accents from non-English speaking regions (like France or Japan).

Our personal favorite misinterpretation was:

“trump bull diced a fox news heist showing again why he likes pain and beads”

It might be hard to reconcile the sentence above with the actual headline, but this illustrates the challenge of phoneme identification across a broad spectrum of speakers. Add in factors like background noise and acoustic variation, and it becomes clear what a hard problem this is. The progress voice platforms have made is quite impressive, and there will be more progress over time. As the voice platform continue collecting data, their speech to text capabilities for all people and all accents will improve.

However, no matter how good the voice platforms get at correctly converting speech into text, there will always be times when what a user said either isn’t understood, or is misunderstood; and dealing gracefully with these situations is a difficult, but critical, aspect of good voice user interface design. In our next blog post, we’ll dive deeper into this problem, and discuss some of the best practices for dealing with it.